Backtesting is the bedrock of quantitative trading. It is the necessary process of simulating a strategy against historical data to estimate its viability. However, relying on backtest results without rigorous validation often leads to the most dangerous outcome in trading: false confidence. A strategy that looks phenomenal on paper but fails spectacularly in live trading almost always suffers from one or more methodological flaws introduced during the backtesting phase.

To ensure your strategy’s simulated success translates into real-world profits, you must identify and meticulously eliminate the most common backtesting mistakes that lead to false confidence. This article dives into the seven primary pitfalls and provides actionable techniques to safeguard the integrity of your research.

1. The Sin of Look-Ahead Bias

Look-ahead bias (or look-forward bias) is arguably the most fundamental error in backtesting. It occurs when your simulation incorporates future data that would not have been available to a real-time trader at the moment a decision was made.

Example: Using the closing price of a bar to determine the entry or exit for the trade initiated within that same bar, or calculating an indicator (like a moving average) using data points that occurred after the decision time.

How to Avoid It:

The rule is absolute: at the moment a decision is made, your strategy may rely only on data that was already available at that moment. For bar-based strategies, this means the current bar’s high, low, and close are off-limits until the bar has completed; only its open is known while the bar is still forming. Compute signals from completed bars and execute no earlier than the next bar’s open.
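One way to enforce this rule in code is to compute every signal from completed bars only and fill at the following bar's open. The sketch below is a minimal illustration under that assumption; the function names (`sma`, `next_bar_entries`) and the price-above-average entry rule are hypothetical and not taken from any particular backtesting library.

```python
from typing import List, Optional


def sma(values: List[float], period: int) -> List[Optional[float]]:
    """Simple moving average; entry i uses values[i-period+1 .. i] only."""
    out: List[Optional[float]] = []
    for i in range(len(values)):
        if i + 1 < period:
            out.append(None)  # not enough history yet
        else:
            window = values[i - period + 1 : i + 1]
            out.append(sum(window) / period)
    return out


def next_bar_entries(
    opens: List[float], closes: List[float], period: int
) -> List[Optional[float]]:
    """Return the entry price for each bar, or None when no entry is taken.

    The signal for bar i is computed from closes up to bar i-1 (all
    completed bars), and the fill is the open of bar i, which is the
    first price available after the signal exists.
    """
    avg = sma(closes, period)
    entries: List[Optional[float]] = [None] * len(closes)
    for i in range(1, len(closes)):
        prev_avg = avg[i - 1]
        if prev_avg is not None and closes[i - 1] > prev_avg:
            entries[i] = opens[i]
    return entries


# Hypothetical sample bars
opens = [10.0, 10.5, 11.2, 11.0, 12.1]
closes = [10.4, 11.0, 10.9, 12.0, 12.5]
entries = next_bar_entries(opens, closes, period=2)
```

The biased version of the same loop would compare `closes[i]` with `avg[i]` and fill at `closes[i]`, which uses a price that is not known until the bar is already over.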

2. Ignoring Data Quality and Survivor Bias

The quality of the input data dictates the quality of the output backtest. Using flawed or incomplete data introduces systematic errors that inflate performance metrics.

a) Survivor Bias (also called survivorship bias): This affects any backtest run over a universe of stocks, such as the constituents of an equity index. If you only include currently listed companies in your historical simulation, you ignore the stocks that failed, were delisted, or went bankrupt. Because only the stocks that “survived” remain in the sample, the strategy’s historical performance will appear unrealistically high.

b) Poor Tick Data: Low-quality or misaligned timestamp data, especially in high-frequency environments, can lead to inaccurate fill prices and unrealistic execution assumptions.

How to Avoid Them:

Invest in comprehensive, institutional-grade data feeds that include delisted securities (for index strategies) and ensure accurate timestamping for high-resolution tests. Data cleansing is critical: check for missing bars, duplicate or out-of-order timestamps, and unadjusted splits or dividends before trusting any result.
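To make the survivor-bias point concrete, the sketch below builds the tradable universe as of a given historical date rather than from today's listings. The `Listing` record, the symbols, and the dates are all hypothetical; a real data vendor will have its own schema for listing and delisting dates.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Listing:
    symbol: str
    listed: date
    delisted: Optional[date]  # None means the stock is still trading


def universe_on(listings: List[Listing], day: date) -> List[str]:
    """Symbols tradable on `day`, including ones that were delisted later."""
    return sorted(
        item.symbol
        for item in listings
        if item.listed <= day and (item.delisted is None or day < item.delisted)
    )


# Hypothetical listing history
listings = [
    Listing("AAA", date(2001, 1, 2), None),
    Listing("BBB", date(2003, 6, 1), date(2009, 3, 15)),  # failed later
    Listing("CCC", date(2012, 5, 1), None),
]

# Point-in-time universe for a simulation date in 2008
universe_2008 = universe_on(listings, date(2008, 1, 2))

# Biased universe: only stocks that are still listed today
survivors_only = sorted(item.symbol for item in listings if item.delisted is None)
```

In this example the point-in-time universe contains the stock that later failed, while the survivors-only list both drops it and includes a stock that did not yet exist on the simulation date.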

3. The Fatal Flaw of Over-Optimization (Curve Fitting)

Over-optimization, or curve fitting, is the act of tuning a strategy’s parameters so closely to the historical noise of the specific data set that the strategy perfectly describes the past but loses all predictive power for the future. This is the primary driver of false confidence.

Example: Adjusting the period of a Moving Average (MA) crossover from 20 to 21, then to 22, simply because 22 yielded a $100 higher net profit on the specific 5-year historical window tested. The strategy is now highly brittle and unlikely to adapt to changing market conditions.

How to Avoid It:

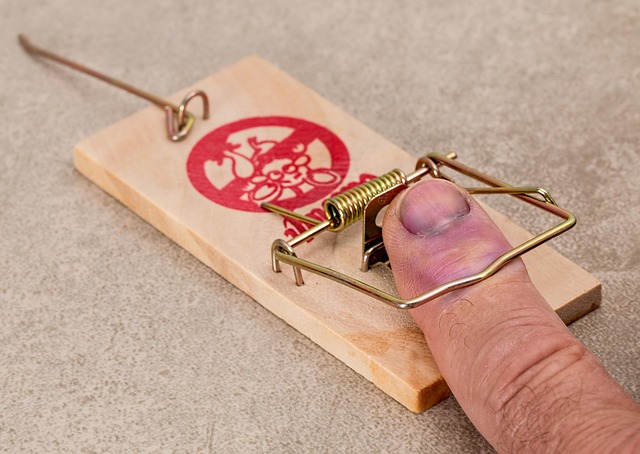

Use robustness checks. A strategy should use only the minimum number of parameters required. Once parameters are optimized, check performance stability by varying them slightly (e.g., if the optimal MA period is 50, test 40, 45, 55, and 60). If performance drops drastically just outside the optimal value, the strategy is likely curve-fitted. The behavioral side of this problem is discussed in The Psychological Trap of Over-Optimization.
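A robustness check of this kind can be automated once you have a performance score for each parameter value. The sketch below is one possible formulation: the function name `is_parameter_stable`, the 70% retention threshold, and the sample scores are illustrative assumptions, not an established standard.

```python
from typing import Dict, List


def is_parameter_stable(
    results: Dict[int, float],
    best: int,
    neighbors: List[int],
    min_ratio: float = 0.7,  # assumed threshold; tune to your own tolerance
) -> bool:
    """True if every neighboring parameter keeps at least `min_ratio`
    of the score achieved at the chosen parameter."""
    best_score = results[best]
    if best_score <= 0:
        return False  # nothing worth preserving
    return all(results[p] >= min_ratio * best_score for p in neighbors)


# Hypothetical scores (e.g., Sharpe Ratio) per moving-average period
plateau = {45: 1.10, 50: 1.20, 55: 1.15, 60: 1.05}
spike = {45: 0.10, 50: 1.20, 55: 0.20, 60: -0.30}

plateau_ok = is_parameter_stable(plateau, best=50, neighbors=[45, 55, 60])
spike_ok = is_parameter_stable(spike, best=50, neighbors=[45, 55, 60])
```

A broad plateau of similar scores passes the check, while an isolated spike at a single value fails it, which is the signature of curve fitting described above.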

4. Underestimating Transaction Costs and Slippage

One of the quickest ways a profitable backtest becomes a losing live strategy is the failure to accurately model real-world costs.

Transaction Costs include commissions, exchange fees, and regulatory charges. Slippage is the difference between the expected price of a trade and the price at which the trade is actually executed, often widening in volatile or illiquid markets.

Case Study: A high-frequency strategy relying on short-term mean reversion generates an annual profit of 200,000 USD from 10,000 trades, or 20 USD per trade, assuming zero slippage. If the true average slippage is just 0.05% (5 basis points) per trade and the average trade size is, say, 20,000 USD, slippage costs 10 USD per trade, or 100,000 USD per year. The strategy loses 50% of its profit to slippage alone, potentially making live deployment non-viable.
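The arithmetic behind the case study can be checked in a few lines. The 20,000 USD average notional per trade is an assumed position size chosen for illustration; with a different trade size, the share of profit lost to slippage changes proportionally.

```python
def slippage_cost(trades: int, notional_per_trade: float, slippage_bps: float) -> float:
    """Total slippage in currency units; 1 basis point = 0.01%."""
    return trades * notional_per_trade * slippage_bps / 10_000


gross_profit = 200_000.0      # annual profit with zero slippage (from the case study)
trades = 10_000               # trades per year (from the case study)
notional_per_trade = 20_000.0 # ASSUMPTION: average trade size in USD
slippage_bps = 5.0            # 0.05% per trade (from the case study)

cost = slippage_cost(trades, notional_per_trade, slippage_bps)
net_profit = gross_profit - cost
share_lost = cost / gross_profit
```

Under these assumptions the cost comes to half of the gross profit. Doubling the assumed trade size would wipe out the profit entirely, which is why position size belongs in any slippage estimate.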

How to Avoid Them:

Always model conservative, realistic costs. For strategies trading illiquid assets or high volumes, introduce a slippage buffer (e.g., 1-2 ticks) in your backtest environment. It is far better for the backtest to underestimate profitability slightly than to overestimate it drastically.
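A slippage buffer expressed in ticks can be applied directly to each simulated fill. The following sketch handles a long round trip only; the helper names, the tick size, and the flat per-side commission are hypothetical values for illustration.

```python
def fill_price(quote: float, side: str, slippage_ticks: int, tick_size: float) -> float:
    """Worsen the quoted price by a fixed number of ticks:
    buys fill higher, sells fill lower."""
    if side not in ("buy", "sell"):
        raise ValueError("side must be 'buy' or 'sell'")
    adjustment = slippage_ticks * tick_size
    return quote + adjustment if side == "buy" else quote - adjustment


def long_round_trip_pnl(
    entry_quote: float,
    exit_quote: float,
    quantity: float,
    slippage_ticks: int,
    tick_size: float,
    commission_per_side: float,
) -> float:
    """P&L of buying at entry_quote and selling at exit_quote, net of costs."""
    entry = fill_price(entry_quote, "buy", slippage_ticks, tick_size)
    exit_ = fill_price(exit_quote, "sell", slippage_ticks, tick_size)
    return (exit_ - entry) * quantity - 2 * commission_per_side


# Hypothetical trade: 100 units, 0.01 tick size, 1.00 commission per side
ideal = long_round_trip_pnl(100.00, 100.50, 100, 0, 0.01, 1.0)
buffered = long_round_trip_pnl(100.00, 100.50, 100, 2, 0.01, 1.0)
```

Comparing `ideal` with `buffered` across the whole trade list shows how much of the backtest's edge survives a conservative cost model.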

5. Failing the Out-of-Sample and Walk-Forward Test

The primary defense against curve fitting is rigorous out-of-sample (OOS) testing.

A common but flawed workflow uses 100% of the historical data (In-Sample, or IS) for both development and testing. This is equivalent to studying from the exact exam you will be taking: you memorize the answers but don’t learn the material.

How to Avoid Them:

1. Data Split: Always partition your data into an IS period (used for parameter optimization) and an OOS period (used only for validation, never for optimization).

2. Walk-Forward Analysis: This is the gold standard for dynamic strategy testing. Walk-Forward Optimization periodically re-optimizes parameters on a moving IS window and then tests those parameters on the immediately following, fresh OOS period, simulating how a strategy would be managed in live trading.
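The rolling IS/OOS schedule can be sketched as a generator of index ranges. This is a minimal version assuming fixed window lengths and a step equal to the OOS length; the function name and the window sizes are illustrative choices, and anchored (expanding) windows are an equally valid variant.

```python
from typing import Iterator, Tuple


def walk_forward_windows(
    n_bars: int, is_len: int, oos_len: int
) -> Iterator[Tuple[range, range]]:
    """Yield (in_sample, out_of_sample) index ranges.

    Each OOS range starts exactly where its IS range ends, and the
    whole window then rolls forward by `oos_len` bars.
    """
    start = 0
    while start + is_len + oos_len <= n_bars:
        in_sample = range(start, start + is_len)
        out_of_sample = range(start + is_len, start + is_len + oos_len)
        yield in_sample, out_of_sample
        start += oos_len


# Hypothetical setup: 1,000 bars, optimize on 500, validate on the next 100
windows = list(walk_forward_windows(n_bars=1000, is_len=500, oos_len=100))

for in_sample, out_of_sample in windows:
    # Optimize parameters on in_sample only, then evaluate them,
    # unchanged, on out_of_sample and record the OOS result.
    pass
```

Stitching the OOS results of all windows together gives an equity curve in which every bar was traded with parameters chosen before that bar was seen.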

6. Cherry-Picking Data Windows

Strategies often perform exceptionally well during specific market regimes (e.g., trend-following thrives in prolonged bull runs; mean-reversion thrives in sideways, choppy markets). Cherry-picking involves testing only the periods where the strategy naturally performs best.

How to Avoid It:

Ensure your backtest spans multiple, distinct market regimes, including high volatility periods (like 2008 or early 2020), low volatility periods, major crashes, and sideways consolidation. A robust strategy must demonstrate resilience across various conditions, even if its performance in adverse regimes is merely flat or slightly negative. Using strategy filters based on time of day or volatility can enhance robustness during these diverse periods.
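One simple way to see whether results depend on a single regime is to break the return series down by regime label. The sketch below assumes each return has already been tagged with a label; the labels and figures are hypothetical, and the labeling itself should be done from dates or market data, not from the strategy's own results.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def compound_by_label(returns: List[Tuple[str, float]]) -> Dict[str, float]:
    """Compound periodic returns separately for each regime label."""
    growth: Dict[str, float] = defaultdict(lambda: 1.0)
    for label, r in returns:
        growth[label] *= 1.0 + r
    return {label: g - 1.0 for label, g in growth.items()}


# Hypothetical labeled periodic returns
labeled_returns = [
    ("bull", 0.10),
    ("bull", 0.10),
    ("crash", -0.05),
    ("sideways", 0.00),
    ("crash", -0.05),
]
by_regime = compound_by_label(labeled_returns)
```

If nearly all of the profit sits under one label, the backtest says little about how the strategy behaves in the others.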

7. Prioritizing Net Profit Over Risk-Adjusted Metrics

A strategy showing $1 million in net profit might look fantastic, but if it required a maximum drawdown (MDD) of $900,000, it is fundamentally unstable. Focusing solely on total profit ignores the risk taken to achieve those returns, leading to a dangerous psychological reliance on absolute gain.

How to Avoid It:

Move beyond simple profit/loss calculations. Your analysis must center on risk-adjusted metrics, which evaluate return relative to volatility and risk exposure. Key metrics include:

- Sharpe Ratio: Return in excess of the risk-free rate, divided by total volatility (risk).

- Sortino Ratio: Like the Sharpe Ratio, but divides only by downside volatility (bad risk).

- Maximum Drawdown (MDD): The largest peak-to-trough decline experienced.

- Calmar Ratio: Annualized return divided by the maximum drawdown.

A high Sharpe Ratio and a manageable MDD are far better indicators of a strategy’s potential success than raw net profit. Understanding these figures is essential for quantifying risk tolerance, as detailed in Essential Backtesting Metrics: Understanding Drawdown, Sharpe Ratio, and Profit Factor.
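The four metrics listed above can be computed from a return series and an equity curve with the standard library alone. The sketch below follows common textbook definitions; conventions vary (sample versus population deviation, choice of target return, annualization factor), so treat the defaults here as assumptions rather than the only correct form.

```python
import math
from typing import List


def sharpe_ratio(returns: List[float], risk_free: float = 0.0,
                 periods_per_year: int = 252) -> float:
    """Annualized mean excess return divided by sample standard deviation."""
    excess = [r - risk_free for r in returns]
    mean = sum(excess) / len(excess)
    var = sum((x - mean) ** 2 for x in excess) / (len(excess) - 1)
    if var == 0:
        raise ValueError("returns have zero volatility")
    return mean / math.sqrt(var) * math.sqrt(periods_per_year)


def sortino_ratio(returns: List[float], target: float = 0.0,
                  periods_per_year: int = 252) -> float:
    """Like Sharpe, but the denominator counts only returns below target."""
    excess = [r - target for r in returns]
    mean = sum(excess) / len(excess)
    downside = math.sqrt(sum(min(x, 0.0) ** 2 for x in excess) / len(excess))
    if downside == 0:
        raise ValueError("no returns below target")
    return mean / downside * math.sqrt(periods_per_year)


def max_drawdown(equity: List[float]) -> float:
    """Largest peak-to-trough decline as a fraction of the peak."""
    peak = equity[0]
    worst = 0.0
    for value in equity:
        peak = max(peak, value)
        worst = max(worst, (peak - value) / peak)
    return worst


def calmar_ratio(annual_return: float, mdd: float) -> float:
    """Annualized return divided by maximum drawdown (both as fractions)."""
    if mdd <= 0:
        raise ValueError("maximum drawdown must be positive")
    return annual_return / mdd


# Hypothetical equity curve and per-period returns
equity = [100.0, 120.0, 90.0, 130.0, 117.0]
mdd = max_drawdown(equity)
sample_returns = [0.01, -0.01, 0.02, 0.0]
sharpe = sharpe_ratio(sample_returns, periods_per_year=1)
sortino = sortino_ratio(sample_returns, periods_per_year=1)
```

Passing `periods_per_year=1` leaves the ratios unannualized, which makes it easier to verify the numbers by hand before applying them to daily data.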

Conclusion: Building Confidence Through Rigor

False confidence generated by faulty backtesting is one of the most common reasons promising trading careers end prematurely. By systematically addressing the 7 common backtesting mistakes (eliminating look-ahead bias, ensuring data integrity, rigorously managing optimization, modeling realistic costs, splitting data for OOS validation, testing across all regimes, and prioritizing risk metrics), you transition from performing simple historical simulations to conducting genuine quantitative research.

True confidence comes not from spectacular backtest equity curves, but from the statistical robustness proven by careful methodology. For a complete deep dive into all aspects of quantitative strategy validation, return to The Ultimate Guide to Backtesting Trading Strategies: Methodology, Metrics, and Optimization Techniques.

Frequently Asked Questions (FAQ)

Q1: What is the single biggest threat posed by Look-Ahead Bias?

Look-ahead bias artificially inflates historical returns by basing trade decisions on information that was chronologically unavailable. The biggest threat is that it provides a perfect equity curve that instantly collapses when deployed live because the market no longer provides the “future” information the strategy relied upon.

Q2: How can I differentiate between necessary optimization and harmful curve fitting?

Necessary optimization seeks parameter sets that perform well across a range of inputs and remain profitable on fresh, out-of-sample data. Harmful curve fitting results in parameters that are hyper-specific to the tested dataset, often failing robustness checks where small parameter variations lead to catastrophic performance drops.

Q3: Why is Walk-Forward Optimization considered superior to a simple In-Sample/Out-of-Sample split?

While a simple split tests generalization, Walk-Forward Optimization (WFO) tests robustness over time. WFO simulates the reality of trading where parameters must be periodically reassessed and retuned as market regimes change, providing a more realistic assessment of a strategy’s long-term manageability.

Q4: If I am trading Bitcoin futures, how should I estimate slippage for my backtest?

For liquid assets like Bitcoin futures, slippage can be estimated based on historical average bid-ask spreads during trading hours, factoring in the intended volume of your trades. A conservative approach is to add a small fixed cost (e.g., 1-2 ticks) to every entry and exit, especially if your strategy is designed to execute quickly or at market price.

Q5: If my strategy shows a 50% profit but a 40% maximum drawdown, should I deploy it?

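Generally, no. A 40% maximum drawdown (MDD) means the account at one point lost 40% of its peak value, and recovering from a 40% loss requires a gain of roughly 67%. Judge the result with the Calmar Ratio (annualized return / MDD), and note that the period matters: if the 50% profit was earned over several years, the annualized return is far below the drawdown and the Calmar Ratio is well under 1.0. A robust strategy usually strives for an MDD that is significantly smaller than the annualized return, resulting in a Calmar Ratio well above 1.0.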

Q6: Does Survivor Bias only affect stock index backtesting?

While it is most commonly associated with stock indices (where delisted companies are excluded), survivor bias can also appear in other asset classes, such as futures markets if highly illiquid or discontinued contracts are artificially excluded from the historical data pool.
